Linear Algebra

linear system:

equivalent ∼—same solution set

consistent—have solution; inconsistent—no solutions

coefficient matrix:

augmented matrix:

row operation

replacement

interchange

scaling

row equivalent—same after proper row operation

⇒ equivalent matrix

entry—ai**j o**r ai, j o**r A[i, j]o**r Ai, j—the (i, j) entry of A

leading entry—leftmost entry of a nonzero row

(row) echelon form—step-like, leading entry going in to the right

echelon matrix

reduced echelon form—all leading entries are 1, entries below them are 0

an echelon form (REF) of…

the reduced echelon form (RREF) of…

only 1 for each matrix

pivot position—position corresponding to the leading entry of its reduced echelon form

pivot column—the column containing a pivot position

pivot—nonzero number in a pivot position

row reduction

forward phase—make pivots the leading entries

backward phase—eliminate non-pivots in pivot column

partial pivoting—use greatest entry in a column as the pivot for programming

solve linear system

basic variable—corresponding to pivot column

free variable—not so

solution set

element(s): 0—inconsisten**t o**r 1—consisten**t o**r ∞

parametric description—

back-substitution—solve for the basic variables one by one dealing with echelon form

existence and uniqueness theorem—consistent ⇔ no rightmost column as pivot column

vector equation— x1a1 + … + xnan = b

column vector

solution for vector equation x1a1 + … + xnan = b equivalent to solution of

Spa**n{v1,⋯, vp}—subset of ℝn spanned (/generated) by v1,⋯, vp—the set of all linear combinations of v1,⋯, vp

b ∈ spa**n{a1, ⋯, an} ⇔ A has a pivot in every row

matrix equation Ax = b:

Ax = b solution equivalent to x1a1 + … + xnan = b

existence of solutions—has solution iff b ∈ Spa**n{a1, ⋯, an}

{v1, ⋯, vp} spans (/ generates) ℝm if Spa**n{v1, ⋯, vp} = ℝm

identity matrix In,

Inx = x ∀ x ∈ ℝn

homogeneous linear system—can be writen as Ax = 0

opposite: heterogeneous

at least 1 solution x = 0, a.k.a. trivial solution

nontrivial solution iff ∃free variable

parametric vector form— x**=su** + tv (s, t ∈ ℝ) parametric vector equation of the plane

solution representation

vector

parameter vector form— x = p + tv (t ∈ ℝ) the equation of the line through p parallele to v

span

solution set of Ax = b— {w|w = p**+vh} i**f ∃solutio**n p ∀solutio**n o**f Ax** = 0 vh

linear dependency of matrix columns

a1⋯an of A are linearly independent ⇐ Ax = 0 has only the trivial solution

dependent ⇐ ∃ c1⋯cn, c1a1 + ⋯ + cnan = 0

more vectors than entries in each vector ⇒ linearly dependent

linearly dependent ⇔ at least one vector = linear combination of the others

⇒ ∃vj (j > i), vj is a linear combination of v1…vj − 1 if {v1…vp} is linearly dependent AND v1 ≠ 0

contains 0 ⇒ linearly dependent

linear transformation

transformation (/ function / mapping) T : ℝn → ℝm

domain ℝn

codomain ℝm

image T(x)

range: set of all images

matrix transformation x ↦ Ax

⇔ ∀ u**,** v, c, d, T(cu + dv) = c**T(u) + d**T(v)

kernal Nul A

range Col A

matrix operations

diagonal entry—ai**i

main diagonal

diagonal matrix—square matrix with all non-diagonal entries 0

zero metrix 0

vector multiplication

A(Bx)=(A**B)x

row-column rule—

*

A is right-multiplied by B, B is left-multiplied by A

A, B commute—A**B = B**A

power of matrix

A0 is the identity matrix

transpose matrix AT

(A**B)T = BTAT

matrix inversion

invertible—for n × n matrix A, ∃ n × n matrix C, C**A = In = A**C

C: an inverse of A

(non)singular matrix—(not) invertible

2 × 2 matrix iff det A = a**d − b**c ≠ 0

∀ invertible n × n matrix A, b ∈ ℝn, Ax = b ⇒ x**=**A−1b

(A**B)−1 = B−1A−1

(AT)−1 = (A−1)T

only product of invertible matrices is invertible

for n × n matrix: A is invertible

⇔ row equivalent to In

⇔ linear transformation x**↦Ax** is one-to-one

⇔ ∀ b**∈ℝn, Ax** = b has solution

⇔ linear transformation x**↦Ax** maps ℝn ***o***nto ℝn

⇔ ∃ n × n matrix C, C**A = I

⇔ ∃ n × n matri**x D, A**D = I

⇔ AT is invertible ⇔ det A ≠ 0

⇔ columns of A forms a basis of ℝn

⇔ Col A = ℝn

⇔ 0 is not an eigenvalue of A

⇔ det A ≠ 0

invertible linear transformation T : ℝn**↦ℝn—∃ S : ℝn↦**ℝn, ∀ x ∈ ℝn, S(T(x)) = x, T(S(x)) = x

S—the inverse T−1

elementary matrix—obtained by performing a single elementary row operation on identity matrix

roo**p A = E**A, where E = roo**p Im, roo**p is the single elementary row operation

elementary matrix E is invertible, E−1 is another elementary matrix obtained by reversed row operation

n × n matrix A is invertible iff row equivalent to In

A−1 = roo**p In if In = roo**p A

find A−1—row reduce and get

LU fractorization—A = L**U

A : m × n matrix

L : m × m unit lower triangular matrix

lower triangular matrix—entries above the main diagonal are 0s

U : m × n echelon matrix

implementation—

calculation: p. 145

determinant

A is quare matrix

usually use i = 1 the first row

origin—the last pivot in echelon form of the matrix obtained without division

Ai**j—obtained by deleting the ith row and jth column in A

(i, j)-cofactor Ci**j = (−1)i + jdet Ai**j

cofactor expansion across the ith row of A—

triangular matrix A— the entries on the main diagonal

row/ column operation

replacement—same

interchange—opposite

scaling—scales the same way

echelon form U by row replacements and r interchanges

det A = det AT

det A**B = (det A)(det B) proved using elementary matrices

if A is invertible

det k**A = kndet A if A has n rows

linearity property—linear transformation where only x is a variable

Cramer’s rule—the unique solution of Ax = b has entries

A is invertible n × n matrix, b ∈ ℝ

Laplace transfrom—converts system of linear differential equations to system of linear algebraic equations

a way to find A−1—A(A−1)j = (In)j

adjugate/ classical adjoint of A—

determinant as area or volume

area of parallelogram of 2 × 2 matrix A is |det A|

volume of parallelepiped of 3 × 3 matrix A is |det A|

linear transformation T : ℝ2 → ℝ2 (ℝ3 → ℝ3) determined by 2 × 2 (3 × 3) matrix A ⇒ {are**a (volum**e) o**f T(S)} = |det A| ⋅ {are**a (volum**e) o**f S}

vector space—10 axioms

subspace—3 axioms needed

0 ∈ H

H is closed under vector addition—u + v ∈ H

H is closed under multiplication by scalars—cu ∈ H

Spa**n{vi} is a subset of vector space V if vi ∈ V

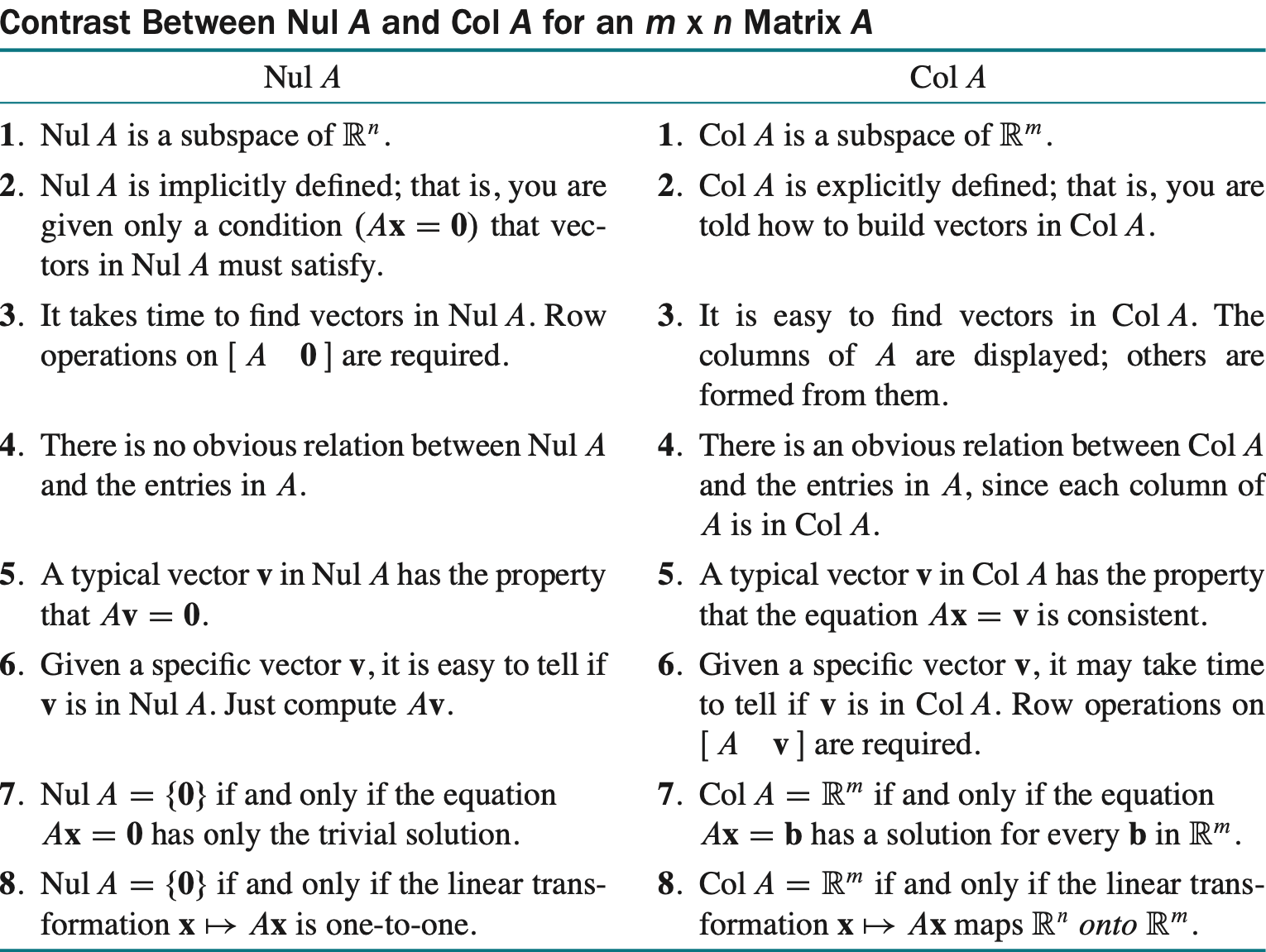

null space of A, Nul A—solution set of Ax = 0

—is a subspace of ℝn

explicit discription—with the vectors that span Nul A

basis—solve and represent with free variables

dim Nul A= number of free variables

column space of A, Col A—Spa**n{a1, ⋯, an}

—is a subspace of ℝm

Col A = {b : b**=Ax**, x ∈ ℝn}

Col A = ℝm iff ∀b ∈ ℝm, ∃x, Ax = b

basis—pivot columns

dim Col A= number of pivot columns

row space of A,

—is a subspace of ℝn

= Col AT

basis—pivot rows

kernal/ null space of linear transformation T—T(u) = 0

basis Β of subset H—linearly independent, span H

standard basis for ℝn {e1, ⋯, en}

spanning set A small AP

linearly dependent set A big AP

coordinate system

the unique representation theorem

Β-coordinates of x—scalars that map them

Β-coordinate vector of x—

coordinate mapping x ↦ [x]Β

it is a one-to-one linear transformation—isomorphism

x = PΒ[x]Β, where change of coordinate matrix PΒ = [b1, ⋯, bn]

dimension

finite-dimensional vector space—spanned by finite set

0 dimension—{0}

infinite-dimensional

dim ℙn = n + 1

rank—dim Col A

rank thm—ran**k A + dim Nul A = n

an eigenvector corresponding to λ—x ≠ 0, Ax = λx, A i**s n × n

an eigenvalue of A—λ

main diagonal entries on triangular matrix

0 is an eigenvalue of A iff A is not invertible

find eigenvector x given eigenvalue λ: Ax = λx ⇒ (A − λ**I)x = 0

(A − λ**I)T = AT − λ**I

eigenspace of A—Nul (A − λ**I)

characteristic equation of A—det (A − λ**I) = 0

⇔ λ is an eigenvalue of A

characteristic polynomial of A (with degree n)

multiplicity of eigenvalue λ—the multiplicity as root

similarity—∃P invertible, P−1A**P = B

PBP−1 = A

A, B are similar

similarity transformation—A → P−1A**P

same characteristic polynomial and same eigenvalues

diagonalize—A = PDP−1 where D is diagonal

diagonalizable—∃P, D

⇔ A has n linearly independent eigenvectors

⇔ ∑dimensions of eigenspaces = n

⇔ characteristic polynomial factors completely into linear factors

⇔ dimension of eigenspace for each λ= multiplicity

⇔ basis of all eigenspaces form a basis of ℝn

⇐ A has n distinct eigenvalues

diagonizing—find eigenvalues ⇒ D; find eigenspace basis ⇒ P; check A**P = P**D

vector operation

inner product (dot product)—u ⋅ v**=**uTv

length—

normalizing—get unit vector in the same direction as nonzero vector

distance dis**t(u**,** v) = ∥u − v∥

orthogonal relationship

orthogonal—u ⋅ v**=**0

⇔ ∥u + v∥ = ∥u∥ + ∥v∥

orthogonal complement W⊥—the set of all vectors orthogonal to subspace W

is a subset of ℝn

interactive—A⊥ = B ⇔ B⊥ = A

(Row A)⊥ = Nul A

(Col A)⊥ = Nul AT

orthogonal set—ui**⋅**uj = 0 ∀ i ≠ j

⇒ linearly independent

is a basis for the subspace

orthogonal basis—basis that is orthogonal set

weight— for y ∈ Spa**n{uj}

orthogonal projection—

where z**⊥**u

subspace L spanned by u

orthonormal set—unit vector orthogonal set

orthonormal basis—basis that is orthonormal set

Gram-Schmidt process—calculate orthonormal basis by subtracting projection

QR factorization—orthonormal basis of linearly independent matrix Q, R = QTA

orthogonal matrix—square matrix with orthonormal columns

⇔ U−1 = UT

⇔ orthonormal rows

∥Ux∥ = ∥x∥

(Ux) ⋅ (Uy) = x ⋅ y

Ux⊥Uy ⇔ x**⊥**y

product of orthogonal matrix is orthogonal matrix

orthogonal projection—

for U of orthonormal basis ui

best approximation theorem— is the closest point in W to y

general least-square problem—find x for smallest least-square error ∥b − Ax∥

⇔ normal equation—ATAx = ATb

ran**k ATA = ran**k A

Ax = b has a unique least-square solution ∀ b ∈ ℝm

⇔ A is linearly independent

⇔ ATA is invertible

Χβ**=**y

design matrix—Χ

parameter vector—β

observation vector—y

least-square line (line of regression)—y = β0 + β1x

observed value—yi

predicted value—β0 + β1xi

residual—β0 + β1xi − yi

Χβ = y where

residual vector ϵ

diagonalization of symmetric matrix

symmetric matrix—AT = A

eigenvectors from different eigenspaces are orthogonal

orthogonally diagonalizable—could have orthogonal matrix P

A = PDP−1 = PDPT

⇔ A is symmetric

spectral decomposition—

where ui are the columns of P

uiuiT is a projection matrix—pro**j{ui}x**=**(uiuiT)x

single value decomposition SVD—A = UΣVT

left singular vectors form U is m × m orthogonal

is m × n

, σ1 ≥ ⋯ ≥ σr > 0

right singular vectors form V is n × n orthogonal

∀ m × n matrix A, ATA is symmetic

its eigenvalues λ1 ≥ ⋯ ≥ λi ≥ ⋯ ≥ 0

single values—

for {v1, ⋯****,vn} with eigenvectors of ATA correponding to λ1 ≥ ⋯ ≥ λi, and A has r nonzero singular values, {Av1, ⋯****,Avr} is an orthogonal basis for Col A, ran**k A = r

find single value decomposition

find orthogonal diagonalization of ATA—P, D′

V = P to orthonomal = {vi}

D and Σ

U—