Probability and Statistics

⌊x⌋= the greatest integer smaller than x

sequence

index variable

explicit formulas

recurrence relation

arithmetic sequences

geometric sequences

bounded above/ below

(un)bounded

convergent ⇒ bounded

bounded, monotone ⇒ convergent

series

convergent vs divergent series

harmonic sum

p series:

convergent ⇒ limn → ∞an = 0

limn → ∞an ≠ 0⇒ divergent

tests

divergence test

partial sum

geometric: |q| < 1

integral: f, f(n) = an, continuous, ultimately decreasing 🡪 same

comparison:

scaling with or

alternating: |an + 1| − |an| < 0, ∀ n > n0, limn → ∞an = 0⇒ convergent

absolute convergence: convergent

conditional convergence: convergent, but divergent

convergent convergent

ratio:

root:

uniform convergence:

fn(x) converge to f(x): fn(x) → f(x) as n → ∞

∃N(ε) independent to x, ∀n > N, |fn(x) − f(x)| < ε⇔ uniform convergence: fn ⇉ f

dominated convergence: fi(x) → f(x) as x → ∞, ∃g, ∀x, i, fi(x) < g(x) ⇒ limi → ∞∫abfi(x)d**x = ∫abf(x)d**x

power series:

center a, coefficient cn

⇒ x = a⇔ convergent

or ∀x ∈ ℝ, convergent

or ∃R > 0, |x − a| < R⇒ convergent, |x − a| > R⇒ divergent

method: , radius of convergence

|x − a| < R⇒ absolutely convergent, |x − a| > R⇒ divergent from ratio test

Bessel function of the first kind:

basic formula:

plug out terms, create (x − a), see something as 1 − x, do it, range

Taylor and Maclaurin Series:

for |x| < 1

for |x| < 1

differentiation and integration: ,

may lose boundary of convergence

Cauchy product: ,

probability

sample space S: nonempty set

collection of events: subset of S: P(S)

probability measure P(A) ∈ [0, 1], P(⌀) = 0, P(S) = 1

if Ai… are mutually disjoint

symmetric difference: AΔB = (A ∖ B) ∪ (B ∖ A)

complement: Ac : = S ∖ A

disjoint: A ∩ B = ⌀ ⇔ A ⊆ Bc ⇒ A ∖ B = A ∩ Bc

De Morgan Laws: (A ∪ B)c = Ac ∩ Bc, (A ∩ B)c = Ac ∪ Bc

A = B ⇔ A ⊆ B, B ⊆ A

cardinality: |A|= # of elements in A

inclusion-exclusion principle: |A ∪ B| = |A| + |B| − |A ∩ B|, |A ∪ B ∪ C| = |A| + |B| + |C| − |A ∩ B| − |A ∩ C| − |B ∩ C| + |A ∩ B ∩ C|

partition of the sample: Bi, disjoint, S = B1 ∪ B2 ∪ …

P(A) = P(A ∩ B1) + P(A ∩ B2) + …

P(A ∖ B) = P(A ∩ Bc) = P(A) − P(B)

not necessarily disjoint: P(A1 ∩ A2 ∩ …) ≤ P(A1) + P(A2) + …

independent: P(A ∩ B) = P(A)P(B) for two events, and P(A1 ∩ … ∩ Ai) = P(A1)…P(Ai) for more events

uniform probability:

continuous uniform distribution: , [x, y] ⊂ [a, b]

combinatorics: length k, n symbols ⇒ nk

ordered subset

binomial:

generating function:

exponential generating function:

Bayes’ theorem:

random variable X(bla) = 2

discrete:

PDF probability mass/ density function: pX(x) = P(X = x)

law of total probability:

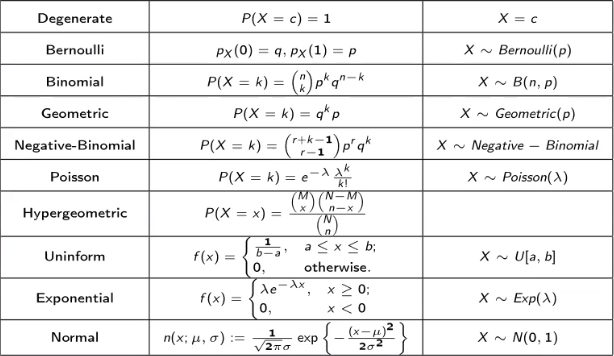

Bernoulli: pX(0) = q, pX(1) = p

Binomial:

rX(t) = (t**p + q)n

geometric: pX(k) = p**qk

negative-binomial:

Poisson:

E(X) = var(X) = λ

hypergeometric:

continuous distribution: pX(k) = 0 ∀ x ∈ ℝ,∫−∞∞f(x)d**x = 1

uniform:

exponential:

P(x ≥ t) = e−λ**t

normal:

gamma:

Γ(x + 1) = x**Γ(x)

chi-squared: Χ2(n) is the distribution of Z = X12 + … + Xn2 with Xi ∼ N(0, 1)

E(Z) = n

for z ≥ 0 if n = 1

t (or student): t(n) is the distribution of with Xi ∼ N(0, 1)

CDF cumulative distribution fun

joint distributions Y = h(X)

if X discrete, then

if X continuous, h(x) monotone where fX(x) > 0, then

marginal distribution: FX(x) = limy → ∞FX, Y(x, y)

joint probability fun

marginal PDF: table

joint density fun: f(x, y), ∫−∞∞∫−∞∞f(x, y)dxd**y = 1

marginal PDF fX(x) = ∫−∞∞fX, Y(x, y)d**y

independent

or f

continuous:

law of total prob: P((X, Y) ∈ B) = ∬BfX(x)fY|X(y|x)dxd**y

independent random variables: ∀B1, B2, P(X ∈ B1, Y ∈ B2) = P(X ∈ B1)P(Y ∈ B2)

expectation: E(X**Y) = E(X)E(Y) if X, Y independent

variance: var(X) = E((X − μx)2) = E(X2) − E2(X), var(X + Y) = var(X) + var(Y) + 2cov(X, Y)

covariance: cov(X, Y) = E((X − μX)(Y − μY)) = E(X**Y) − E(X)E(Y)

cov(a**X + b**Y, Z) = a cov(X, Z) + b cov(Y, Z)

correlation:

i.i.d. mutually independent and identically distributed

X1, …, Xn ∼ Bernoulli(p) i. i. d. ⇒ X1 + … + Xn ∼ B(n, p)

X1 ∼ B(n1, p), X2 ∼ B(n2, p) ⇒ X1 + X2 ∼ B(n1 + n2, p)

X1 ∼ Poisson(λ1), X2 ∼ Poisson(λ2) ⇒ X1 + X2 ∼ Poisson(λ1 + λ2)

generating fun: rX(t) = E(tX)

rX + Y(t) = rX(t)rY(t)

kth moment: E(Xk)

kth central moment: E((X − μx)k)

moment-generating fun:

MX(k)(0) = E(Xk)

MX + Y(s) = MX(s)MY(s) X, Y independent, work for rX(t)

uniqueness: if ∃s0 > 0, MX(s) < ∞ ∀s ∈ (−s0, s0), MX(s) = MY(s), then X, Y have the same distribution also work for r

characteristic fun: cX(s)= E(eiXs)

inequalities

Markov’s: X ≥ 0, a > 0

Chebychev’s: a > 0

Cauchy-Schwarz:

=, iff if var(Y) > 0

law of large number

sample sum: Sn = X1 + … + Xn for {Xi} i. i. d.

sample average:

WLLN weak: limn → ∞P(|Mn − μ| ≥ ε) = 0 for {Xi} same μ, var ≤ v, v < ∞, ε > 0 not necessarily i. i. d.

SLLN strong: P{limn → ∞Mn = μ} = 1 for {Xi} i. i. d.

x

central limit theorem: limn → ∞P(Zn ≤ x) = P(Z ≤ x) for {Xi} i. i. d, Z ∼ N(0, 1),

convolution: for X, Y independent, Z = X + Y

discrete:

continuous: fZ(z) = ∫−∞∞fX(z − w)fY(w)d**w

different convergence

: {Xn} converges in probability to Y, if limn → ∞P(|Xn − Y| ≥ ε) = 0

: {Xn} converges with probability 1 (almost surely) to Y, if limn → ∞P(Xn = Y) = 1

: {Xn} converges in distribution to Y, if limn → ∞P(Xn ≤ x) = P(Y ≤ x) ∀ x ∈ {x|P(Y = x) = 0}

sample

sample variance:

kth sample moment:

point estimation

the method of moments:

MLE maximum likelihood estimation: make L big as possible

maximum likelihood fun: L(θ; x1, …, xn) = p(X1 = x1, …Xn = xn|θ) or f

x1, …, xn independent:

good point estimator

unbiased: E(θ̂n) = θ

consistent:

mean squared error E((θ̂n − θ)2) is small

confidence interval: P(θ̂n− ≤ θ ≤ θ̂n+) ≥ 1 − α

⇐ P(a ≤ g(θ) ≤ b) ≥ 1 − α

N, t: let −b = a,

Χ2: let

deal with Xi ∼ N(μ, σ2)

μ = μ0:

hypothesis testing

null hypothesis H0

type I/ II error: H0 true/ false

level of significance α: probability for type I error

power of the test 1 − β, β: probability for type II error

p-value: probability that H0 is true

reject if p-value < α

for {Xi ∼ N(μ, σ02)} i.i.d., use

for two samples,

linear regression

linear least squares

correlation coefficient:

standard statistical model: Yj = β0 + β1xj + ej, j = 1, 2, …, n

E(ej) = 0, var(ej) = σ2

intercept β0, slope β1, residual ej

estimators

E(β̂0) = β0, E(β̂1) = β1

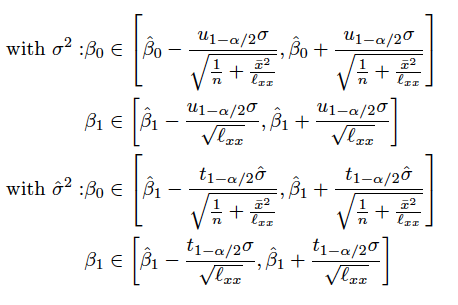

if ej ∼ N(0, σ2) i.i.d., then β̂0, β̂1 normal dist

sum of squared errors SSE:

independent of β̂0, β̂1

1 − α confidence interval for β̂0, β̂1

1 − α confidence interval for σ2

1 − α confidence interval for y0 = E(ŷ0):